Inference 2 - Hypothesis Tests

1 Contents

This section covers a fundamental part of inference: hypothesis testing.

Tests of hypotheses are frequently applied in econometrics, e.g. t-tests for OLS parameters or in tests for heteroscedasticity.

In this section we will:

- Take a look at the general hypothesis testing procedure

- Derive hypothesis testing with a simulation

- Learn how to do hypothesis testing in R

In the previous part I assumed that we know the population. Actually – in practice – we don’t. We use hypothesis testing because we don’t much about our populations.

We actually don’t know:

- The population mean \(\mu_X\) (our parameter of interest)

- The population variance \(\sigma^2_X\) and standard deviation \(\sigma_X\)

- The true distribution of the population \(X \sim ?(?)\)

In this scenario (which is the realistic scenario) we do the following:

- Assume the population distribution and a value for our parameter of interest

- Then estimate the unknown parameters from a sample

- Test if our assumptions are reasonable

2 Formal Hypothesis Testing Procedure

Assume that the distribution of the population is normal (e.g. because it is reasonably from theory)

- Formulate a hypothesis about our population parameter (e.g. \(H_0:\mu_X = 1000\)) 1.1 Also the alternative hypothesis \(H_1: \mu_X \neq 1000\)

- Estimate the parameter

- In this case with the arithmetic mean \(\bar{X}\)

- Estimate the variance and derive the sample standard deviation \(S_X\)

- Standardise \(\bar{X}\) using our estimated \(S_X\) and the assumed \(\mu_X\)

- Calculate the probability that our observed \(\bar{X}\) is from the population, given a specific level of certainty (e.g. \(95 \%\))

3 Simulated Population

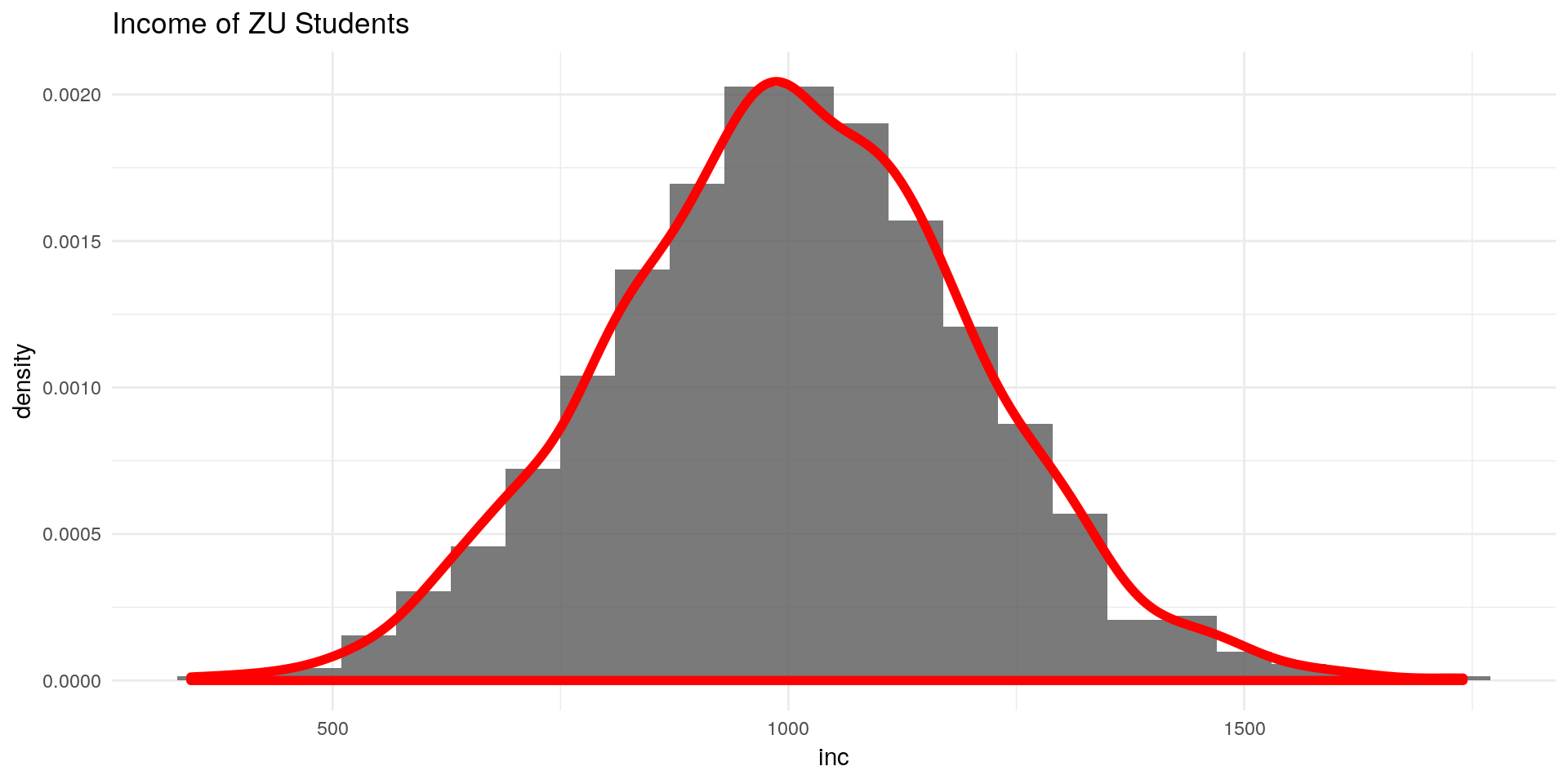

We take again our sample from a simulated population of ZU student income:

library(ggplot2)

library(tidyverse)

set.seed(11) # seed for reproducibility

n <- 1200

inc <- rnorm(n, mean = 1000, sd = 200)

ggplot() +

geom_histogram(aes(x = inc,

y = ..density..),binwidth = 60,alpha = 0.8) +

geom_density(aes(x = inc,

y = ..density..),col = "red",size = 2,alpha = 0.8) +

labs(title = "Income of ZU Students") +

theme_minimal()

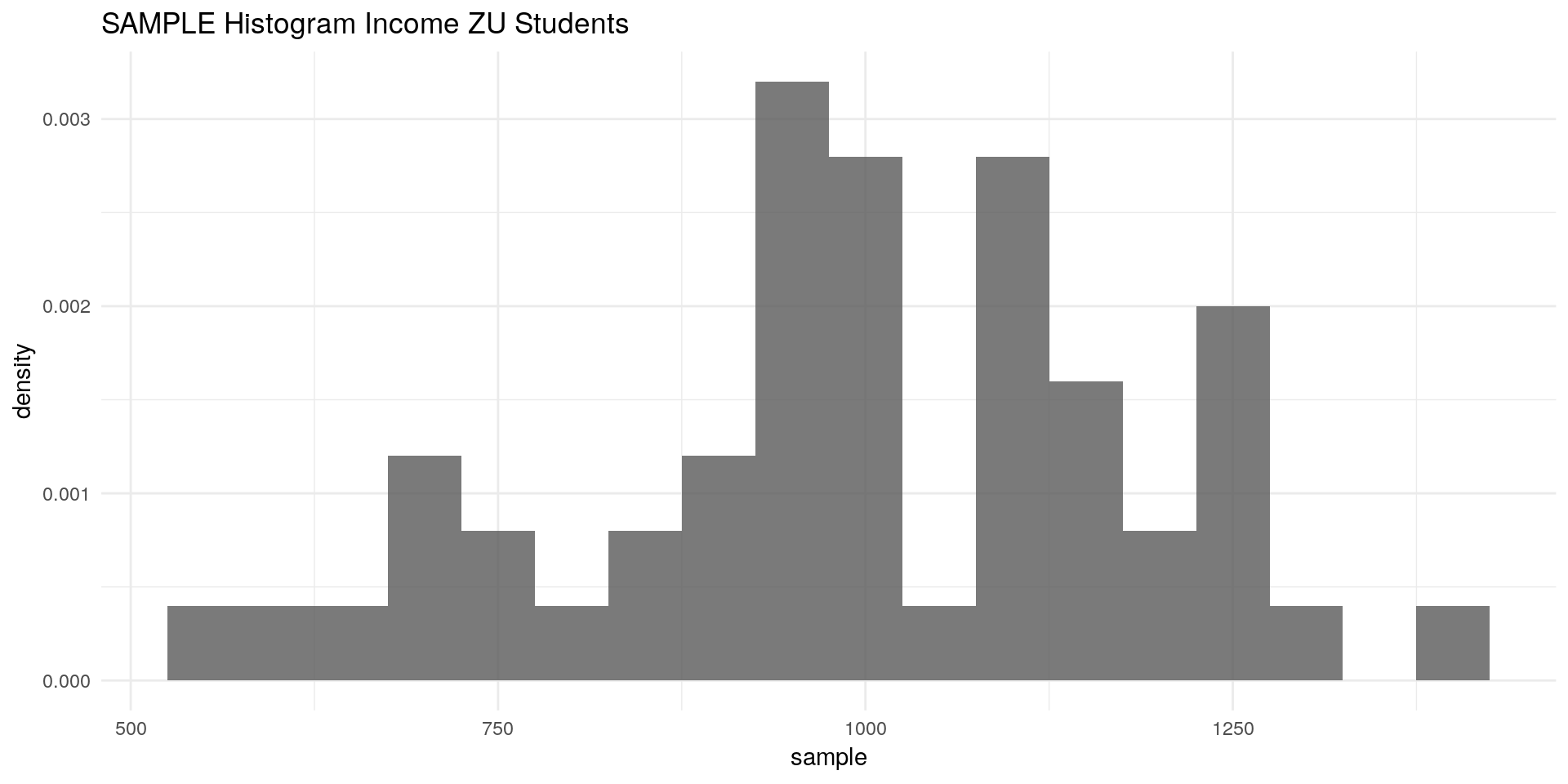

3.1 Sample

We draw a random sample with size 50 from our population:

set.seed(24)

sample_n <- 50

sample <- sample(x = inc, size = sample_n, replace = F)ggplot() +

geom_histogram(aes(x = sample,

y = ..density..),binwidth = 50,alpha = 0.8) +

labs(title = "SAMPLE Histogram Income ZU Students") +

theme_minimal()

4 Hypothesis Testing Procedure

Given the assumption that our population is distributed normally we:

4.1 Formulate the Null-Hypothesis that mean student income is less then \(940 €\)

\[ H_0 : \mu_{inc} < 940 \] Which leaves us with the alternative Hypothesis that the mean income is more or equal to \(940€\): \[ H_1: \mu_{inc} \geq 940 \]

mu_inc = 940

mu_inc## [1] 9404.2 Estimate the mean with the sample mean \(\bar{X}\)

inc_bar <- mean(sample)

inc_bar## [1] 998.39794.3 Estimate the sampling standard deviation \(S / \sqrt{n}\)

S_inc_bar <- sqrt(var(sample)/(sample_n))

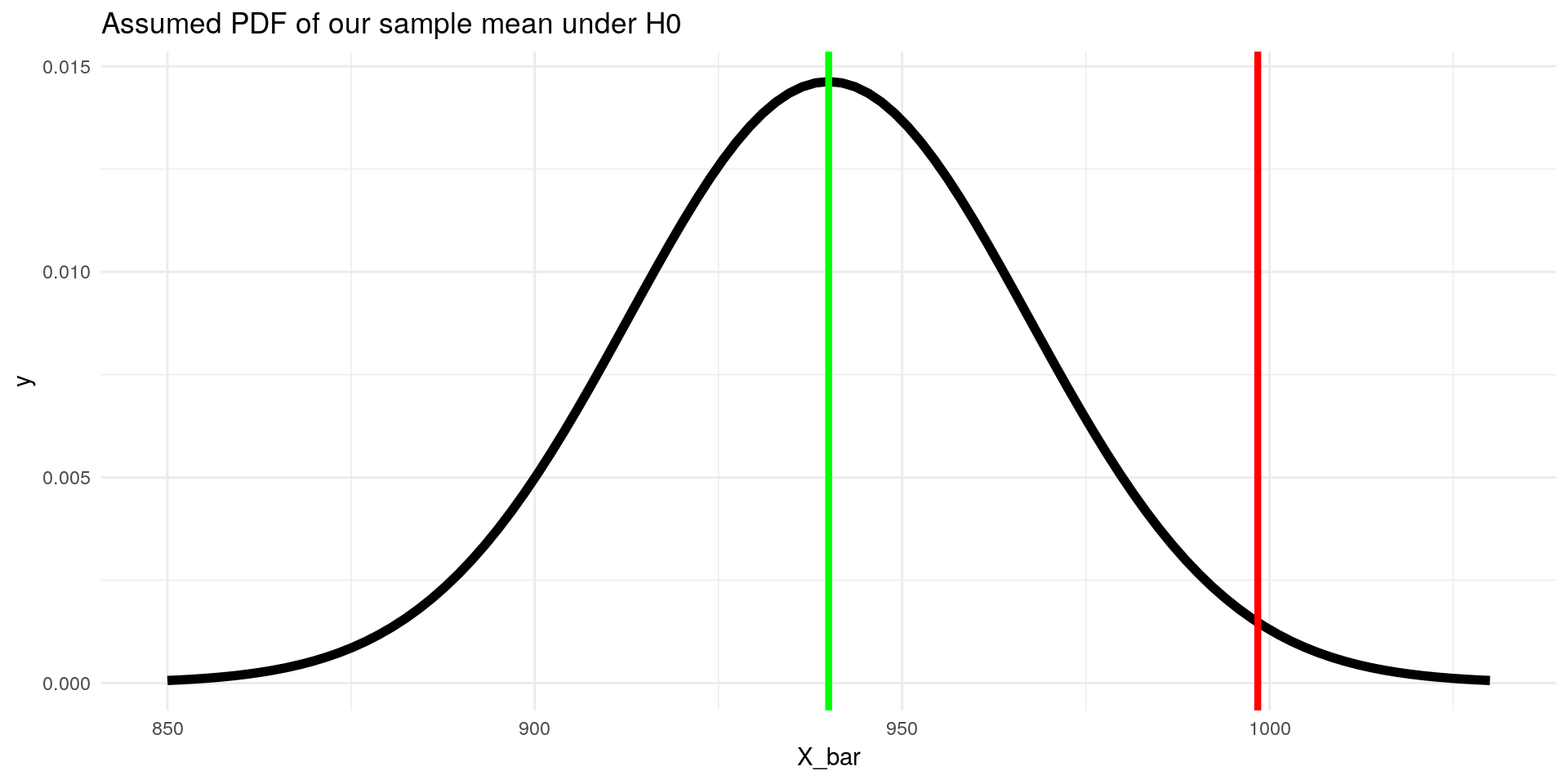

S_inc_bar## [1] 27.27298At this state we would expect our sample mean to be distributed like this:

ggplot(data = data.frame(X_bar = 850:1030), aes(x=X_bar)) +

stat_function(fun = dnorm, args = list(mean = mu_inc, sd = S_inc_bar), size = 2) +

geom_vline(xintercept = inc_bar, color = "red", size = 1.5) +

geom_vline(xintercept = mu_inc, color = "green", size = 1.5) +

labs(title = "Assumed PDF of our sample mean under H0") +

theme_minimal()

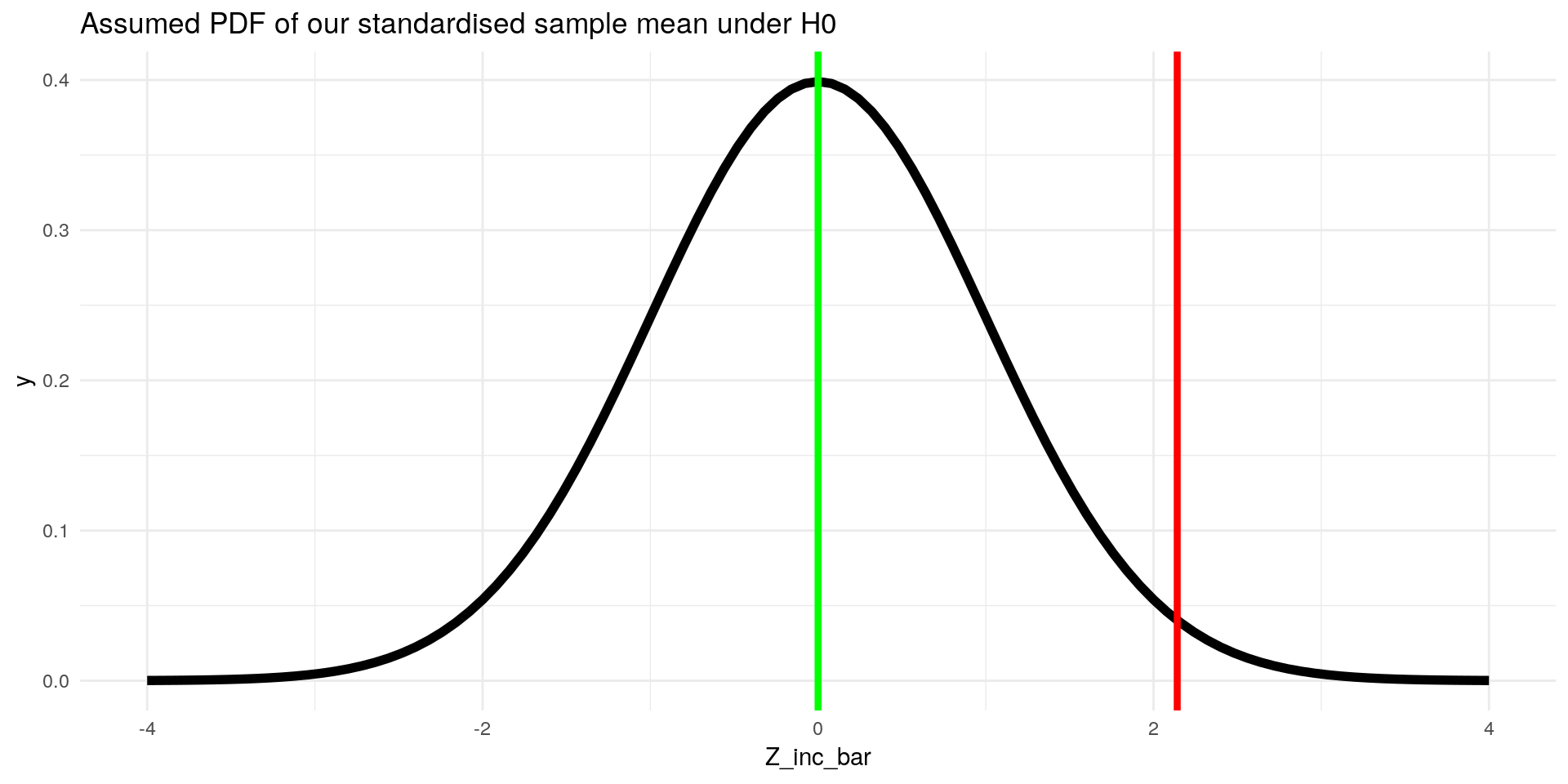

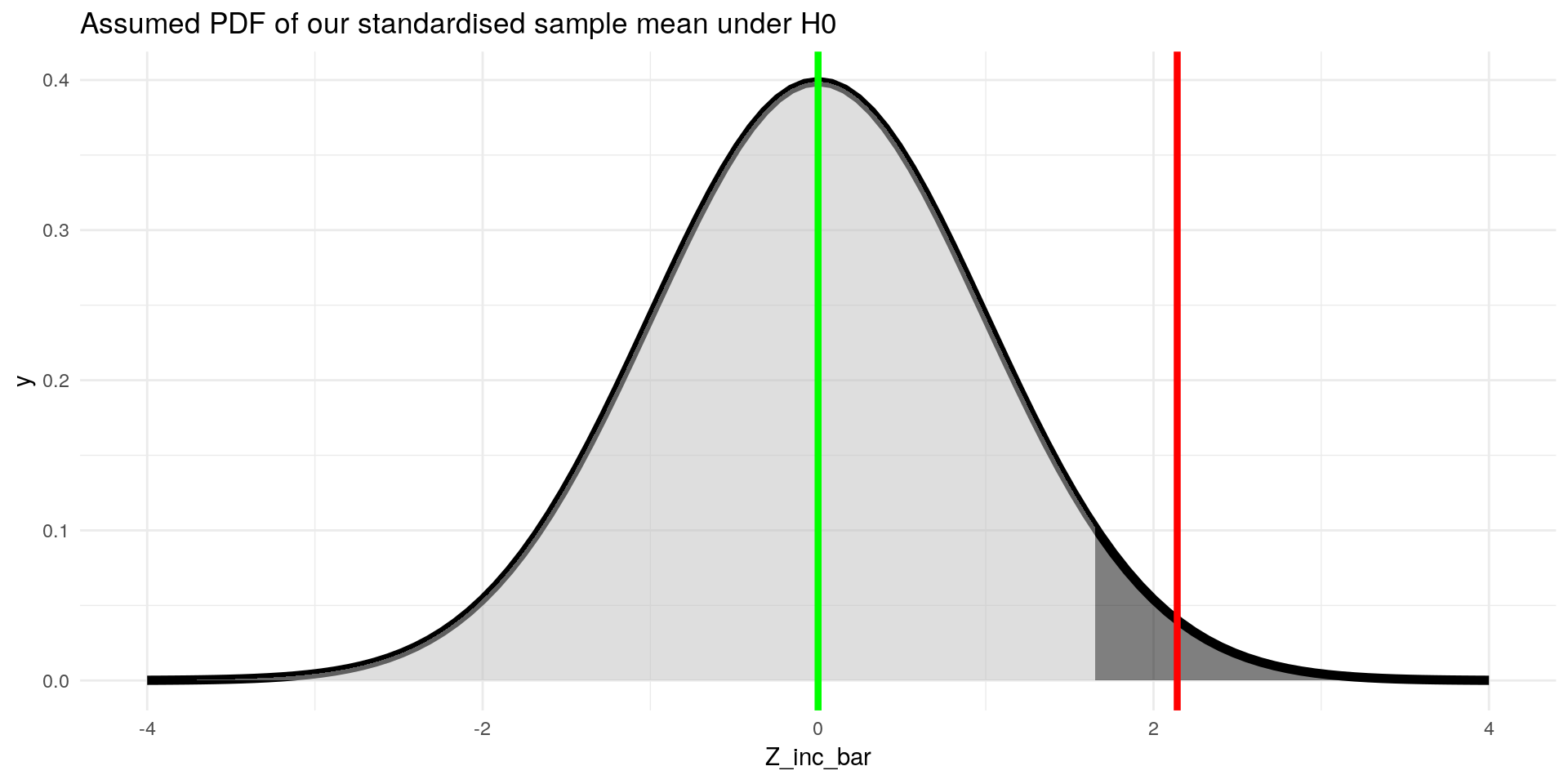

4.4 Standardise \(\bar{X}\) so we can easily calculate probabilities

Z_inc_bar <- (inc_bar - 940) / (S_inc_bar)

Z_inc_bar## [1] 2.141236Our standardised income is also called the test statistic.

ggplot(data = data.frame(Z_inc_bar = -4:4), aes(x=Z_inc_bar)) +

stat_function(fun = dnorm, args = list(mean = 0, sd = 1), size = 2) +

geom_vline(xintercept = Z_inc_bar, color = "red", size = 1.5) +

geom_vline(xintercept = 0, color = "green", size = 1.5) +

labs(title = "Assumed PDF of our standardised sample mean under H0") +

theme_minimal()

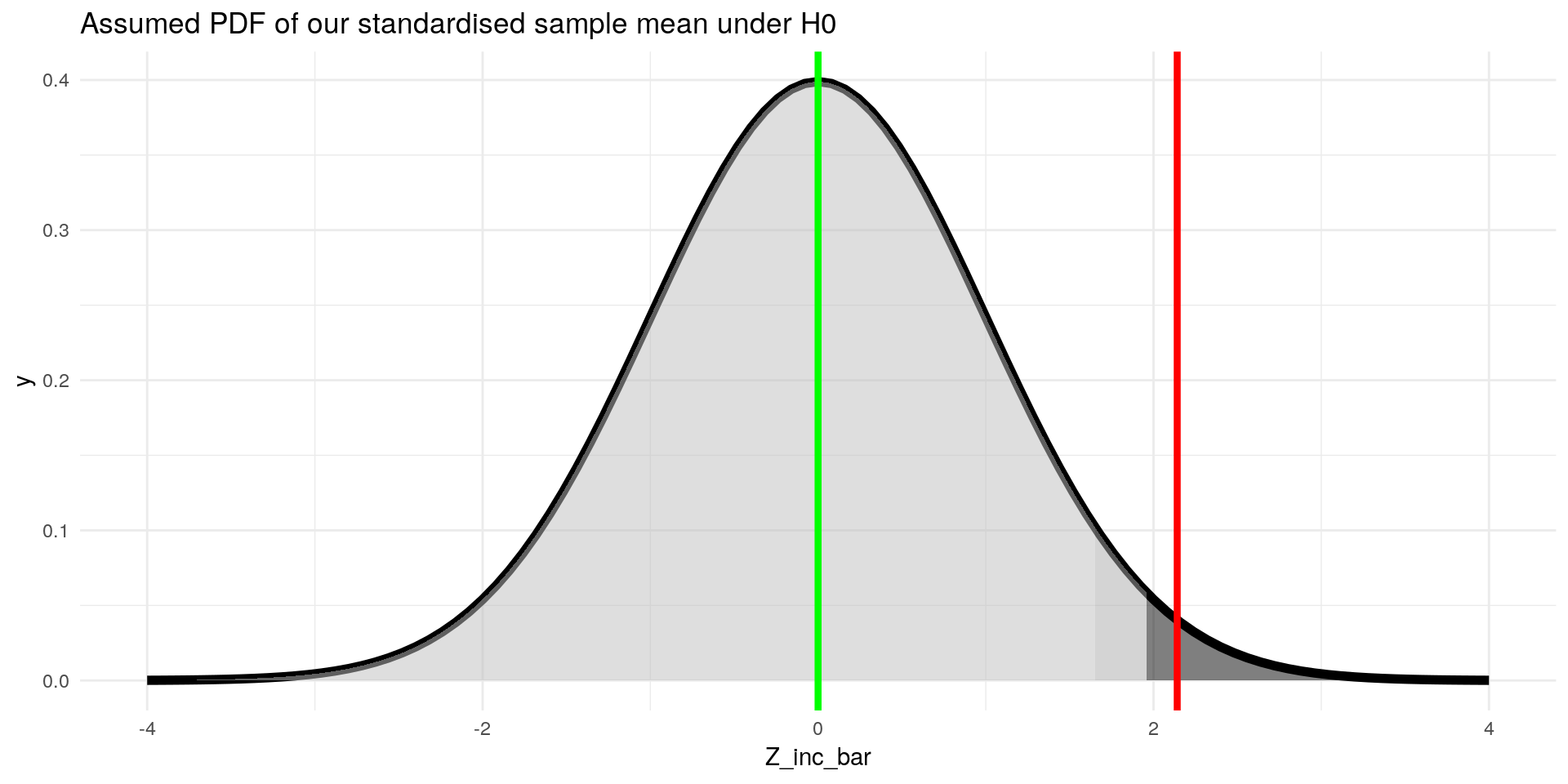

4.5 Calculate probability of \(\bar{X}\) occuring at 5% significance level

Look up the critical \(z-value\) for \(1-5\% = 95\%\), e.g. in this table.

\[ c = z_{95 \%} \approx 1.65 \]

Or in R:

qnorm(0.95)## [1] 1.644854Lets visualise this:

ggplot(data = data.frame(Z_inc_bar = -4:4), aes(x=Z_inc_bar)) +

stat_function(fun = dnorm, args = list(mean = 0, sd = 1), size = 2) +

stat_function(fun = dnorm, xlim = c(-4,1.65), geom = "area", fill = "grey", alpha=0.5) +

stat_function(fun = dnorm, xlim = c(1.65,4), geom = "area", fill = "black", alpha=0.5) +

geom_vline(xintercept = Z_inc_bar, color = "red", size = 1.5) +

geom_vline(xintercept = 0, color = "green", size = 1.5) +

labs(title = "Assumed PDF of our standardised sample mean under H0") +

theme_minimal()

4.5.1 Hypothesis Test:

Check whether our standardised estimator \(Z_{\bar{inc}}\) is larger than our critical value \(c\).

- Critical value \(c\): quantile of the standard normal cdf with \(P(z > c) = 95 \%\)

- Test statistic: our standardised estimator \(Z_{\bar{inc}}\)

Remember our \(H_0: \mu_{inc} < 940€\) and \(H_1: \mu_{inc} \geq 940€\). Lets test that in R:

Z_inc_bar >= qnorm(0.95)## [1] TRUEWe now can make the following statements:

- If the \(H_0\) is true, samples with a \(Z_\bar{inc}\) in the dark grey area only occur \(5\%\) of the time

- We call that area the rejection region

- We showed that our observed sample mean (in standardised form) falls into the rejection region

- In conclusion, we have some evidence against our initial assumption that mean student income is at most \(940 €\)

- This means that we can be optimistic that our sample is not one of the \(5 \%\) of outliers in the world of \(H_0\), but that the \(H_0\) is wrong

Generally we can make the statement: “we can reject the null hypothesis that mean ZU student income is less than \(940€\) at a significance level of \(5\%\)”

4.5.2 Hypothesis Test - Other Possible Variants

What we did was only the right-sided hypothesis test.

We could also test other hypotheses:

- Left-sided: \(H_0: \mu_{inc} > 940€\) and \(H_1: \mu_{inc} \leq 940€\)

- Test: \(\mu_{inc} \leq c\)

- Both-sided: \(H_0: \mu_{inc} = 940€\) and \(H_1: \mu_{inc} \neq 940€\)

- Test :\(|\mu_{inc}| > c\)

4.6 P-Values

So called p-values are often used and reported in statistical work. They indicate the highest level of significance at which we can reject the \(H_0\).

In our example, we could not only reject the \(H_0\) at the \(5\%\) level, but also at the \(2.5\%\) level:

ggplot(data = data.frame(Z_inc_bar = -4:4), aes(x=Z_inc_bar)) +

stat_function(fun = dnorm, args = list(mean = 0, sd = 1), size = 2) +

stat_function(fun = dnorm, xlim = c(-4,1.65), geom = "area", fill = "grey", alpha=0.5) +

stat_function(fun = dnorm, xlim = c(1.65,1.96), geom = "area", fill = "darkgrey", alpha=0.5) +

stat_function(fun = dnorm, xlim = c(1.96,4), geom = "area", fill = "black", alpha=0.5) +

geom_vline(xintercept = Z_inc_bar, color = "red", size = 1.5) +

geom_vline(xintercept = 0, color = "green", size = 1.5) +

labs(title = "Assumed PDF of our standardised sample mean under H0") +

theme_minimal()

But at most, we could reject it at the level of our test-statistic. So we just need to look up the corresponding value for \(Z_\bar{inc}\) in the z- table or with r:

1- pnorm(Z_inc_bar)## [1] 0.01612751This is our p-value. It tells us that we can reject the \(H_0\) at most at a significance level of \(\approx 1.6 \%\).

5 T-Test Function in R

In R we can conduct this procedure with the t.test function:

t.test(sample, mu = 940, alternative = "greater")##

## One Sample t-test

##

## data: sample

## t = 2.1412, df = 49, p-value = 0.01863

## alternative hypothesis: true mean is greater than 940

## 95 percent confidence interval:

## 952.6733 Inf

## sample estimates:

## mean of x

## 998.3979The different p-value is due to the fact that t.test used the Students t-distribution instead of the standard normal distribution. We used the standard normal for ease of explanation, but actually our test statistic is distributed in the Student-t way.

If we calculate our p-value for the Student-t distribution, we get the same result:

1- pt(Z_inc_bar, 49) # degrees of freedom = sample size -1 ## [1] 0.01862729For large samples (\(n > 120\)) the t-distribution and the standard normal distribution are almost equivalent.